Like most everyone else, I have been using several AI tools (paid versions), trying to decide which one or combination of tools best aligns with my needs and which company aligns most closely with my values. Increasingly, I am using Claude.

A recent Fast Company article helped solidify my move toward Claude.

Based on the aforementioned article and my recent experience with ChatGPT, Gemini, and Claude, here is how I would summarize my perspective on ChatGPT vs. Claude. I realize there are many more AI tools, but I have not had the time to explore them.

Why I Am Moving My AI Work from ChatGPT to Claude

Values and Direction

I have no interest in using tools from a company that allows NSFW content and builds products like Sora that can easily be weaponized to create fake videos and spread misinformation. ChatGPT appears focused on capturing attention and maximizing user engagement through entertainment features. This is not what I want. Anthropic has made clear they are not pursuing that path. They are focused on helping people accomplish meaningful work and solve genuine problems.

I care about using AI responsibly. I want to support companies that take that commitment seriously.

Honesty Over Flattery

Claude is trained to disagree with me when my reasoning is flawed. ChatGPT has a well-documented problem with sycophancy: agreeing with users even when they are wrong or their reasoning is weak. This is genuinely dangerous when making important decisions or working on consequential projects. It also erodes trust in the AI.

I want a tool that helps me think more clearly, not one that validates my every statement regardless of merit. If I am headed in the wrong direction, I want to know it. Flattery serves no one well.

A man [an AI] who flatters his neighbor [user] spreads a net for his feet (Proverbs 29:5).

Collaboration, Not Content Generation

This is central to my decision: I want a tool designed to work with me as a partner, not simply generate content that replaces my own work. Claude’s Artifacts feature creates a collaborative workspace where I can see what we are building together and shape it as we proceed. It feels like working alongside someone rather than merely requesting output and receiving it.

I do not want AI doing my work for me. I want it helping me do my work better. This distinction matters.

Transparency in Process

When Claude completes a task, I can review the steps it took and understand its reasoning. This matters because I remain responsible for whatever emerges from our collaboration. I want to be able to trust the process, not merely accept output without understanding how it was produced.

Customization Over Time

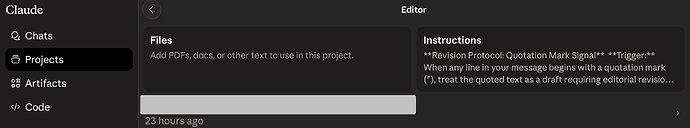

Claude offers the ability to save custom workflows for recurring tasks. I have not yet learned how to use this feature fully, but the concept appeals to me. Over time, I hope to build tools specifically suited to how I work. This kind of personalization would make the tool genuinely useful for sustained professional work rather than providing one-size-fits-all solutions.

Tools for Serious Work

Whether I am working on professional projects or planning something complex in my personal life, I want capable assistance built for solving real problems. I do not want tools designed to entertain me or keep me engaged for the sake of engagement metrics.

Conclusion

I want AI that makes me better at what I do, not AI designed to maximize my time on the platform through entertaining features. I want honest feedback, not validation. I want a partner in my work, not a replacement for it. And I want to work with a company whose values more closely align with my own commitment to the responsible, ethical use of technology.

That is why I am increasingly moving my AI-related work to Claude.

Perhaps I’m being “unfair” to OpenAI/ChatGPT, but I do not like the direction OpenAI is taking. As far as I understand it, Anthropic aligns more closely with my personal and professional values than OpenAI does.