Seems to me it is consistent with Anthropic’s documentation.

I like “language without thought” as shorthand.

LLMs don’t “write” in the sense that humans write; they string together predictive, semantically correct text.

“Because ChatGPT cannot write. Generating syntax is not the same thing as writing. Writing is an embodied act of thinking and feeling. Writing is communicating with intention. Yes, the existence of a product at the end of the process is an indicator that writing has happened, but by itself, it does not define what writing is or what it means to the writer or the audience for that writing.” (John Warner, More Than Words)

“Large language models do not “write.” They generate syntax. They do not think, feel, or experience anything. They are fundamentally incapable of judging truth, accuracy, or veracity. Any actions that look like the exercise of judgment are illusory.” (John Warner, More Than Words)

“What ChatGPT and other large language models are doing is not writing and shouldn’t be considered as such. Writing is thinking. Writing involves both the expression and exploration of an idea, meaning that even as we’re trying to capture the idea on the page, the idea may change based on our attempts to capture it. Removing thinking from writing renders an act not writing.” (John Warner, More Than Words)

So, just to test what Claude could digest, I created a project named “Theories of Photography” and uploaded an ePub version of a book that contains both text and images and is about 120 pages long in its print version. The total file size is about 16MB. I then fed it the prompt I included in my earlier post. Claude chugged away for about 3 minutes, and produced the requested summary, plus the keywords, figures referenced, and suggested related texts, and some next steps if I wanted to continue to work with Claude to expand the analysis.

I then added a 70-page PDF to the project and used the same prompt. No problem: it generated the summary pronto.

I’m not sure why it choked on your PDF!

I use ChatGPT-powered CoPilot at work and use Claude for other things. Lately, I have been struggling to get CoPilot to do the whole job. I have two examples. The first was about 35 paragraphs of descriptions of various technologies. I asked CoPilot to rewrite the descriptions in contractual language, e.g. CONTRACTOR shall provide… CoPilot did 4 paragraphs, then asked if I wanted it to do the rest. I said yes, and it did the same four paragraphs again and repeated its question. I had the same experience when asking it to convert traditional Chinese characters to pinyin (a way of writing Chinese words with the Latin alphabet). It just would not do the whole text, which was only about 5 pages long.

On the other hand, when I asked Claude to convert the Chinese characters to pinyin, it did everything except for the last paragraph. It stopped mid-sentence, so I assume it just ran out of context window. I gave it the last paragraph and was done very quickly.

I am not sure whether CoPilot’s refusal to do the whole job is due to ChatGPT or Microsoft’s CoPilot wrapper, but I really wish I could use Claude for my work. I spent about 30 minutes trying to finish my task with CoPilot. I was done in 10 minutes with Claude.

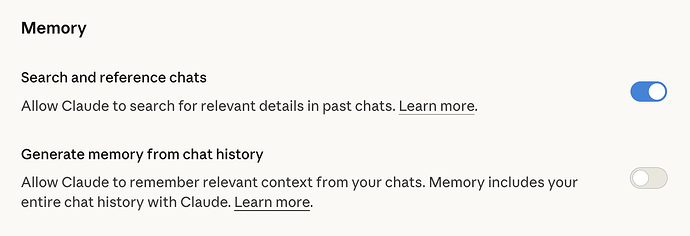

Search and reference chats is basically a search engine for your old chats. Claude will search them if you ask it to, or it thinks it might help to answer. E.g., “find that recipe you gave me for apple pie.”

Memory is a summary of key information from past chats that Anthropic generates and injects into all of your Claude conversations. E.g., it might generate “favorite band: Radiohead” from a longer conversation.

Ah! Thanks!


After reading this thread and the articles that @Bmosbacker posted, I was excited to move to Claude from ChatGPT and have found it to be more insightful. I also think the artifacts are very useful (as opposed to having to search through old chats).

The downside has been running up against token limits (on the $20/month plan) VERY quickly. I’m not using it for coding, just editing, iterating documents and web content, and strategy. As much as I appreciate the opportunity to take a break and touch grass, it is sometimes annoying. I never had this issue with ChatGPT even with heavy use.

I don’t believe I’ve run across any token limits, although to be frank, I’m not sure I’d recognize it if I did. That said, I do find Claude slower than ChatGPT, and occasionally I get a timeout error. But I’m willing to live with that considering the options.

You would notice! It stops working until the tokens refresh. (Tokens are simply units of work.) I do have a project with some uploaded resources (files) in it for reference. I’m wondering if it has been inefficient, reviewing them for every interaction with chats in the project.

You might find this intriguing. Several days ago I asked Claude:

I have a question. Your responses are good but very slow compared to the responses from ChatGPT 5.1, which are very fast. Why is that?

Here is the response:

“Based on the research, the speed difference you’re experiencing between Claude Sonnet 4.5 and ChatGPT comes down to fundamental architectural design choices.

Claude Sonnet 4.5 generates responses at approximately 63 tokens per second with a first response latency of about 1.80 seconds. This represents solid performance for a frontier model, but there are several factors that influence the perceived speed:

Architecture and Design Philosophy

Claude Sonnet 4.5 operates as both a standard model and a hybrid reasoning model, allowing users to choose between near-instant responses and extended, step-by-step thinking. When handling complex queries—particularly those involving lengthy context, detailed analysis, or multi-step reasoning—Claude may deliberately slow its response to ensure accuracy and thoroughness.

Context Processing

Research shows that for Claude models, latency increases with input length—for every additional 500 input tokens, latency increases by approximately 0.53 seconds for Claude 2. Given your work with lengthy documents, book chapters, and theological content, this factor compounds when Claude processes substantial context.

Quality-Speed Tradeoff

Claude Sonnet 4.5 demonstrates more stability and predictability across tasks without requiring special configuration, whereas GPT models show wider performance variance between standard and reasoning-enabled modes. Claude prioritizes consistency and reliability over raw speed—a design choice that aligns with Anthropic’s safety-focused philosophy.

For your writing workflow involving theological precision, formal tone preservation, and complex editorial tasks, Claude’s deliberate approach may actually serve your needs better than faster but potentially less consistent alternatives, even if the response time feels slower.”

Thoughts on that response?

@drfierce I ran out of tokens quite quickly when I used the Opus 4.1 model instead of the default Sonnet 4.5. Which model had you selected?

With Opus it does go very quickly. I’m not that surprised about that because I was asking it to do some CSS for my website.

But with Sonnet, I was still bumping up against the limit on occasion. Since the tokens seemed to refresh every five hours, I’ve gotten into the habit of asking “hard” to tee up the next work session, say, the night before.

I’ve never used Opus. I occasionally bump into timeouts with Sonnet as others described. Most of mine come from agent-enabled coding.

Certainly interesting, but as a non-expert it is hard to know if they are trying to turn a bug (we use a lot of tokens!) into a feature (we are providing better answers). Probably a little Column A and a little Column B. Overall I am very impressed with Claude, specifically some strategic pushback on a project, when it told me that what I was thinking of working on was a waste of time until other priorities are addressed. And it was right!

ChatGPT always was a little too easy to please and would never provide feedback like this.

Indeed, because I told Claude to never seek to flatter or please, sometimes it gets snippy when I keep pressing on an issue that it believes it has already adequately answered. I rather appreciate that!

ChatGPT is so … needy. It keeps pleading with me to let it do more things. “No! Don’t Go! Would you like a shorter teaching-ready summary, a slide deck, a comparison chart of the two books, or a thematic analysis focusing on particular images?”

I assume it’s been tuned this way for the purpose of building engagement.

It feels very disconcerting as, like everything, it’s simply meant to be a tool. The playing on human emotions so blatantly feels wrong.

ChatGPT is so … needy

After seeing these LLMs fail at their assigned task, I’ve typed “I give up,” and they still try to please me: they keep trying to solve the issue and, of course, keep failing at it.

Probably already been mentioned elsewhere, but with the huge effort underway at all AI companies to embed their chatbots with ads and shopping including direct affiliate links, no surprise they continue to ramp up the addictive “stay around, we love you” nature of their interactions.

It was inevitable that AI would follow the rest of the Internet towards maximum ensh*tification. I think the surprise is that they would attempt to accomplish this by maximally brown-nosing the users themselves.